The real question for coders is not whether AI will replace them, but why it won't. AI coding assistants can generate, review, and complete code, reducing coders' workload. According to Gartner, 75% of software engineers will use AI code assistants by 2028.

However, AI doesn't just create opportunities for companies; it also introduces risks. This raises the question of how programmers can use AI code assistants safely and mitigate those risks.

The Impact of Coding Assistants in STEM Sectors

The first question is: what is a coding assistant? According to the French Cybersecurity Agency and the German Federal Office for Information Security, AI coding assistants are models trained on large amounts of text and then refined on source code, or trained directly on large datasets of source code. This means these assistants don't create genuinely original code; rather, they suggest alternatives based on patterns learned from their training data.

Impact for Employers

In the STEM sectors, AI is revolutionising how companies operate. Employers can use AI to automate complex data analysis, speeding up research and development. An analysis by McKinsey indicates that demand for certain occupations could rise significantly by 2030, especially in STEM and health care. The need for STEM and health professionals is expected to grow by 17 to 30 percent between 2022 and 2030, resulting in seven million new jobs in Europe and another seven million in the United States.

Generative AI could automate up to 30 percent of work hours by 2030 in both Europe and the United States. For STEM professionals in Europe, the integration of generative AI could more than double the percentage of automated work hours, increasing from 13 percent to 27 percent.

Impact for Employees

As AI tools become more advanced, there is a growing concern that they could replace developers. However, many developers within the industry see more potential for AI to assist them in their jobs than to replace them. According to data from BJIT, 66% of developers already use ChatGPT for tasks like fixing bugs or improving existing code, and 77.8% believe that using AI improves code quality. AI's greatest potential may lie in taking over repetitive or routine tasks such as bug fixing and simple automation scripts, and 64.9% of developers think AI will enhance the quality of a programmer's work.

Despite AI's rapid progress, only 13.4% believe it will replace programmers within the next five years.

The Risks of AI Coding Assistants

AI is rapidly transforming many industries, and the field of programming is no exception. For programmers, AI presents both opportunities and risks. While AI tools can help automate repetitive tasks, optimise coding workflows, and even assist with debugging, there are several potential risks to consider:

Overreliance on AI

Overreliance on AI tools, such as code auto-completion, AI-driven code generation, or low-code/no-code platforms, could limit a programmer's ability to deepen their understanding of core concepts, algorithms, and problem-solving skills. If a problem arises in the code, programmers who rely too heavily on AI may struggle to solve the bug or improve the AI-generated code due to their lack of deeper technical knowledge.

Another consequence is that some programmers may struggle to detect bugs or identify poor code because they haven't developed their programming skills in the same way as those who don't rely on AI. This could lead to a skills gap between coders who depend on AI and those who don't. Additionally, relying solely on AI-generated code could stifle creativity and original problem-solving, which may ultimately lead to poorer code quality.

Quality and Reliability Concerns

When coders don't fully understand the code generated by AI, its quality and reliability become a concern. AI tools are not perfect and can generate code that doesn't follow best practices or isn't optimised for performance. There is also the risk of introducing subtle bugs that AI may not catch, particularly in complex or nuanced code. Programmers may end up spending more time debugging AI-generated code than they would have spent writing it themselves, which undermines both productivity and trust in AI tools.
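To make the idea of a subtle bug concrete, here is a hypothetical Python sketch (not output from any specific assistant). The helper `add_tag_buggy` looks correct and passes a single quick test, but because Python evaluates a default argument only once, the same list is shared across calls. All function names are illustrative.

```python
# Hypothetical example of a plausible-looking suggestion with a subtle bug.
def add_tag_buggy(tag, tags=[]):
    # Bug: the default list is created once and reused by every call.
    tags.append(tag)
    return tags


# Reviewed version: a fresh list is created on each call.
def add_tag(tag, tags=None):
    if tags is None:
        tags = []
    tags.append(tag)
    return tags


first = add_tag_buggy("a")
second = add_tag_buggy("b")
print(second)                      # ['a', 'b'] (state leaked from the first call)
print(add_tag("a"), add_tag("b"))  # ['a'] ['b']
```

A single test of `add_tag_buggy("a")` would pass, which is why this kind of flaw tends to slip through unless the reviewer understands the language well.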

A Purdue University study on the quality of ChatGPT's answers to programming questions produced some notable findings. It found that 52% of ChatGPT's answers contained incorrect information and 77% were overly verbose. Despite this, participants still preferred ChatGPT's answers 35% of the time. Most notably, participants overlooked the chatbot's misinformation 39% of the time.

AI-generated code is not only often incorrect but can also be insecure, partly because it is based on outdated training data. AI-generated code is therefore unreliable unless it has been thoroughly reviewed by a human. Reviewers should have a deep understanding of coding, run security checks, and flag any problematic AI-generated code blocks.

Automation Bias

According to Forbes, “Automation bias refers to our tendency to favour suggestions from automated decision-making systems and to ignore contradictory information made without automation, even if it is correct.”

In programming, this can mean accepting an AI assistant's suggestion even when a developer's own judgement or a failing test points the other way. In addition, AI systems are trained on large datasets that may carry inherent biases or ethical concerns, which can be inadvertently introduced into the code they generate. Automation bias can have severe consequences, especially in critical sectors like healthcare and public transportation. There have been several tragic incidents, such as the crash of Air France Flight 447 in 2009, in which automation bias played a significant role.

Intellectual Property (IP) Risks

One of the key intellectual property (IP) risks associated with AI-generated code is the potential for copyright infringement. AI tools are typically trained on large datasets that may include publicly available source code, some of which is protected by copyright. As a result, AI-generated code could reproduce parts of copyrighted code, leading to unintentional IP violations. This is particularly concerning when the generated code is used in commercial products, as it could expose companies to legal challenges or lawsuits from the original code's owners. Additionally, determining the ownership of AI-generated code can be complex, as it may be unclear whether the developer, the company behind the AI tool, or the AI model itself owns the rights to the output.

Another IP risk is open-source license "tainting." AI tools are trained on public code from sources such as GitHub, which may include code under copyleft licenses (e.g., GPL). If AI-generated code includes code under a copyleft license, that license may require the combined work to be released under the same terms, conflicting with any proprietary or other open-source licenses that cover the rest of the code. This could create legal risks, such as issues with patent rights and compliance with license terms.

Security Risks

A Stanford study found that programmers who used an AI assistant for security-related programming tasks wrote less secure code than those who didn't. Surprisingly, programmers who used AI were also more likely to believe they had written code without security weaknesses.

Similarly, researchers at New York University tested the security of coding assistants, specifically GitHub Copilot. They created 89 different scenarios for Copilot to complete, resulting in 1,689 generated programs. Approximately 40% of these programs contained security vulnerabilities.

AI tools may inadvertently generate insecure code, either due to vulnerabilities in the AI’s training data or because they fail to account for edge cases in the code. This could expose software to hacking or exploitation. Programmers must exercise extra caution and thoroughly review AI-generated code for security flaws, as relying solely on AI may overlook critical aspects of secure coding practices.
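SQL injection is a classic example of the kind of weakness a reviewer should catch in generated code. The Python sketch below uses the standard `sqlite3` module and a hypothetical in-memory `users` table; the function names are illustrative and are not taken from any of the studies above.

```python
import sqlite3

# Hypothetical in-memory database used only for this illustration.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
conn.executemany("INSERT INTO users VALUES (?, ?)",
                 [("alice", "admin"), ("bob", "user")])


def find_user_unsafe(name):
    # Vulnerable: user input is pasted directly into the SQL string.
    query = f"SELECT name, role FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(name):
    # Reviewed: a parameterised query treats the input as data, not as SQL.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


payload = "x' OR '1'='1"
print(find_user_unsafe(payload))  # returns every row in the table
print(find_user_safe(payload))    # []
```

Both versions "work" for ordinary names, which is exactly why the unsafe one can pass a casual check; parameterised queries are standard practice that a security-aware reviewer would insist on.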

How Can Programmers Use AI Safely?

In conclusion, AI is essential for companies that want to stay ahead of the competition and lead in their sector. However, AI should not be used in coding without proper quality validation and security measures. The next question is: how can programmers use AI safely and effectively?

The French Cybersecurity Agency and the German Federal Office for Information Security published an insightful and thorough report on how to use coding assistants safely, considering the associated risks. Here are some key recommendations:

- Stay up-to-date: Coders should continuously develop their programming skills and stay informed about new technologies and security measures. Learning and development (L&D) is crucial in this respect, and it can be delivered both internally and through collaboration with external AI experts.

- Management: It's not only employees who need to adapt to AI, but also employers. Companies can mitigate the risks associated with coding assistants by establishing clear guidelines, providing safety training, and conducting risk analyses. Additionally, they should remember that finding skilled programmers remains the most important factor in ensuring the quality and safety of code.

- Carefully review AI-generated code: AI is a powerful tool for automating tasks and generating code, but it’s critical for experienced coders to review the AI-generated code thoroughly. Programmers should check for biases, security vulnerabilities, IP risks, and other potential issues. In short, AI should be used critically and with caution.

Applications of AI Code Assistants

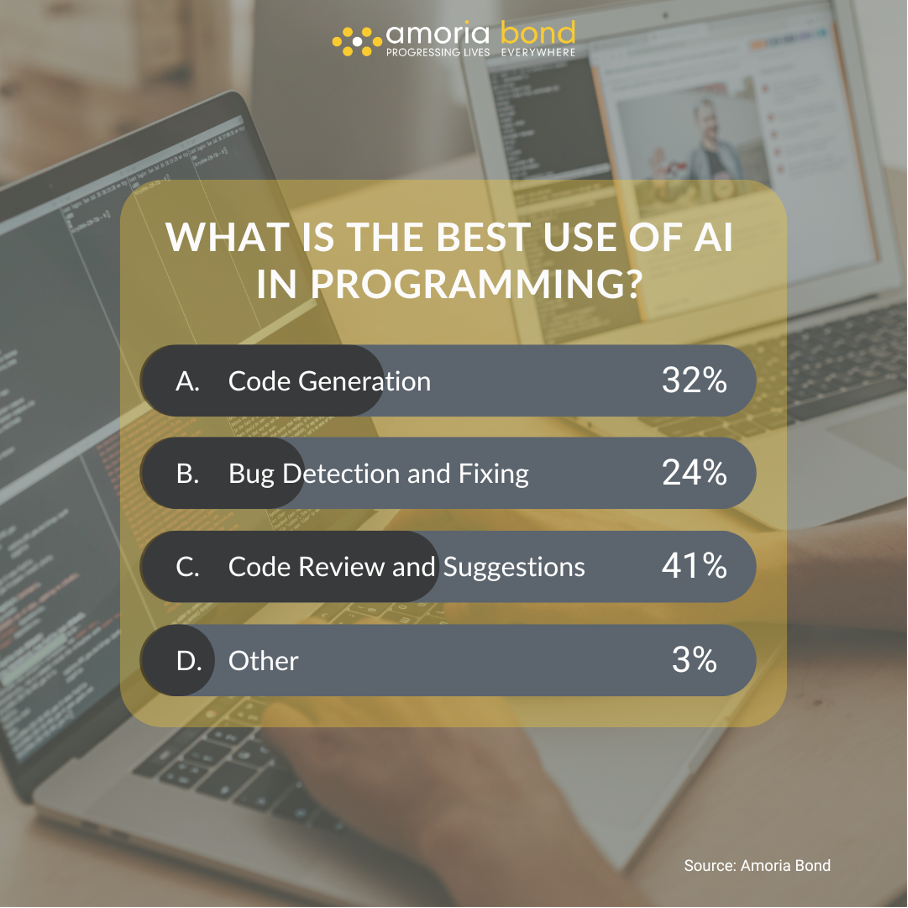

Before we dive into the applications of AI coding assistants, here is what our LinkedIn network thought was the best use of AI in programming. The poll received 71 responses, summarised in the chart above.

Code Generation

Although using AI for code generation comes with risks, it remains a valuable tool when used correctly. In fact, 32% of our respondents indicated that code generation is the most useful application of AI in programming. Popular code generation tools include GitHub Copilot, CodeT5, and Tabnine.

Thanks to recent advances in large language models (LLMs) and natural language processing (NLP), AI can now generate code using deep learning and large neural networks. These tools are trained on vast datasets of publicly available source code, enabling them to generate code from plain-text prompts that describe the desired function. The AI then returns one or more code suggestions. Code generation is particularly helpful for automating repetitive tasks, generating functions, translating code to other languages, and completing code blocks, all of which streamline the coding process.

In general, code generation offers significant benefits for programmers. However, it’s important to remember that AI is best suited for simple code generation, translation, or completion. For more complex coding tasks, it's advisable to avoid relying on AI in order to prevent potential flaws.

Bug Detection and Fixing

In a 2018 report on developer productivity, Stripe found that developers spend more than 17 hours per week on maintenance work such as debugging and refactoring. Used properly, AI tools could significantly streamline this time-consuming work.

AI can test code by generating its own test cases and even correcting errors when necessary. This helps save programmers time and effort, allowing them to focus on other tasks. However, it’s still essential for developers to review the AI-corrected code and maintain a deep understanding of the changes made to ensure both quality and safety.
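As an illustration, the sketch below shows the kind of unit tests an assistant might propose for a small, hypothetical `average` function, written with Python's standard `unittest` module. Note the edge case (an empty list): deciding whether raising `ValueError` is the right behaviour is a judgement the developer, not the tool, has to make.

```python
import unittest


def average(values):
    """Return the arithmetic mean of a non-empty sequence of numbers."""
    if not values:
        raise ValueError("average() requires at least one value")
    return sum(values) / len(values)


class TestAverage(unittest.TestCase):
    # Tests of the kind an assistant might propose; a developer still
    # checks that each expectation matches the intended behaviour.
    def test_typical_values(self):
        self.assertEqual(average([2, 4, 6]), 4)

    def test_single_value(self):
        self.assertEqual(average([5]), 5)

    def test_floats(self):
        # Floating-point results are compared approximately.
        self.assertAlmostEqual(average([0.1, 0.2]), 0.15)

    def test_empty_input_raises(self):
        with self.assertRaises(ValueError):
            average([])


if __name__ == "__main__":
    # Run the suite without calling sys.exit(), so it also works in notebooks.
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestAverage)
    unittest.TextTestRunner(verbosity=2).run(suite)
```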

Code Review and Suggestions

The largest share of respondents (41%) named enhancing and reviewing existing code as the best use of coding assistants. AI can assist in code review by detecting errors, inefficiencies, and security vulnerabilities. It automatically flags issues such as syntax errors, logic flaws, and performance bottlenecks, and offers suggestions for optimisation, like recommending better algorithms or more secure coding practices. AI can also identify outdated or insecure libraries and suggest safer alternatives, thereby improving overall code quality.

In addition to error detection, AI contributes to code maintainability by suggesting refactoring opportunities, such as breaking down large functions or simplifying complex logic. It can also generate documentation and comments to improve code clarity and adherence to best practices. Furthermore, AI can propose unit tests to ensure comprehensive test coverage, offering continuous, automated feedback to help maintain high-quality code.
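A minimal, hypothetical sketch of the "break down a large function" refactoring in Python: the original function mixes parsing, validation, and summing in one loop, while the refactored version extracts parsing into `parse_line` so it can be tested on its own. All names and the input format (`name, price` lines) are assumptions for illustration.

```python
def order_total_original(lines):
    # Before: parsing, validation and summing all happen in one loop.
    total = 0.0
    for line in lines:
        parts = line.split(",")
        if len(parts) != 2:
            continue
        name, price = parts[0].strip(), parts[1].strip()
        if not name:
            continue
        try:
            value = float(price)
        except ValueError:
            continue
        if value < 0:
            continue
        total += value
    return round(total, 2)


def parse_line(line):
    """Return (name, price) for a well-formed 'name, price' line, else None."""
    parts = line.split(",")
    if len(parts) != 2:
        return None
    name, price = parts[0].strip(), parts[1].strip()
    if not name:
        return None
    try:
        return name, float(price)
    except ValueError:
        return None


def order_total(lines):
    # After: the loop only decides which parsed items count towards the total.
    total = 0.0
    for item in map(parse_line, lines):
        if item is None or item[1] < 0:  # skip malformed lines and negative prices
            continue
        total += item[1]
    return round(total, 2)


sample = ["apple, 1.20", "pear, 0.80", "broken line", "melon, -3", ", 2.00"]
print(order_total_original(sample), order_total(sample))  # 2.0 2.0
```

Checking that both versions return the same result on the same input is the kind of verification a reviewer should ask for before accepting an AI-suggested refactoring.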

Other

Only 3% of respondents chose something other than code generation, bug fixing, or code review. Other ways programmers can use AI include:

- Generating test cases

- Explaining code

- Documenting code

How Can Amoria Bond Help You Find the Best Programmers?

At Amoria Bond, we progress lives everywhere by conducting thorough and transparent hiring processes. But what does this mean, exactly? Our recruiters hold multiple interviews with technology specialists to assess their skills and ensure compatibility with your company’s needs.

We select programmers who not only meet the job requirements but also align with your company's culture and identity, leading to higher retention rates. We place a strong emphasis on knowledge and experience because we understand the complexities and risks involved in coding.

Would you like to learn more about our hiring process or services? Contact me or my team to discuss your hiring needs!